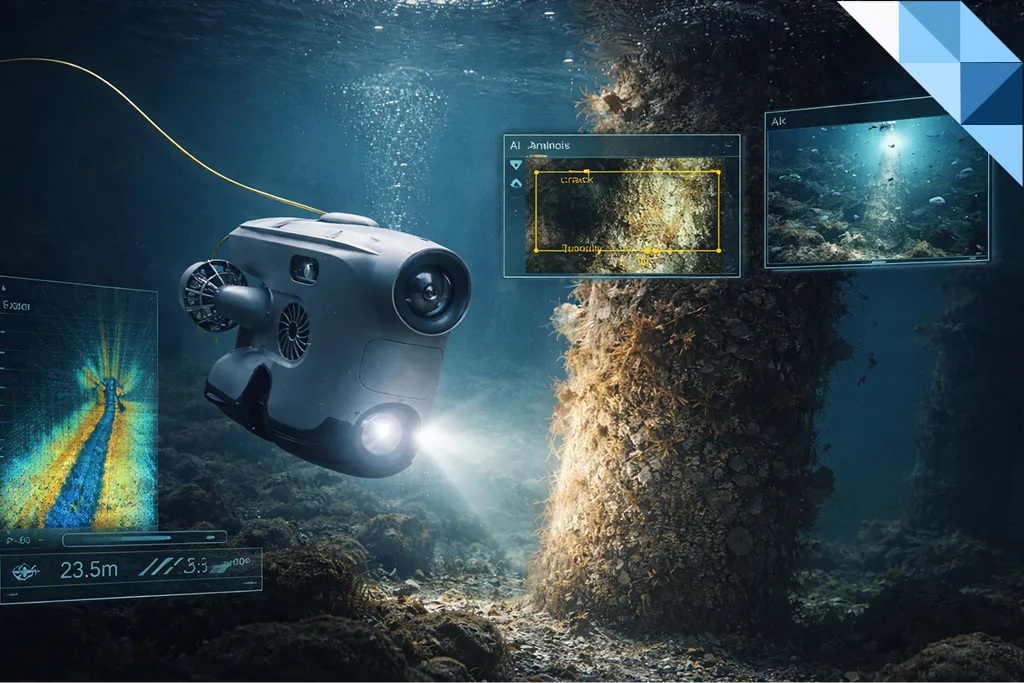

AI in underwater inspection augments inspectors—it does not replace them.

When applied correctly, AI accelerates screening, triage, and coverage proof across 4K laser-scaled video and sonar data, while human review, calibration discipline, and traceability remain mandatory for Class and Flag acceptance.

Used poorly, AI creates impressive demos that fail audits.

Used properly, it delivers faster reviews, cleaner evidence packs, and safer hand-offs to integrity and Risk-Based Inspection (RBI)—without changing acceptance rules.

Why This Matters for Inspection Managers & Integrity Leads

Most “AI for ROV inspection” claims collapse at acceptance:

- detections without scale,

- flags without traceability,

- outputs that cannot be audited or reproduced.

This guide shows where AI actually delivers ROI:

- reducing human review time,

- strengthening coverage proof in low visibility,

- structuring findings so integrity teams can act,

all without risking Class acceptance or your RBI cadence.

For inspection levels, roles, and deliverables context, see Fixed Offshore Structures: Underwater Inspection Requirements under ISO 19902 (2020).

Where AI Fits in GVI / CVI / DVI (Screen → Verify)

AI’s role is screening and structuring, not final disposition.

GVI — Screen Fast

- Apply computer vision to stabilized 4K video to flag:

  - coating loss and holidays,

  - fouling patterns,

  - likely anode wastage.

- Use confidence scores to prioritize human review—not to auto-decide.
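A minimal sketch of confidence-based triage, assuming each AI flag carries a feature class, a UTC timestamp, and a model confidence score. The `Flag` fields and the `build_review_queue` helper are hypothetical names for illustration, not part of any specific tool, and the sample values are invented. The queue only reorders flags for the reviewer; nothing is dropped or auto-decided.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flag:
    """One AI detection candidate (hypothetical schema for illustration)."""
    flag_id: str
    feature_class: str   # e.g. "coating_loss", "anode_wastage"
    utc: str             # ISO 8601 timestamp of the flagged frame
    confidence: float    # model score in [0, 1]


def build_review_queue(flags):
    """Order flags by descending confidence; every flag stays in the queue."""
    return sorted(flags, key=lambda f: f.confidence, reverse=True)


# Illustrative values only.
flags = [
    Flag("F-001", "coating_loss", "2024-05-01T10:02:11Z", 0.62),
    Flag("F-002", "anode_wastage", "2024-05-01T10:05:40Z", 0.91),
    Flag("F-003", "fouling", "2024-05-01T10:07:03Z", 0.35),
]

queue = build_review_queue(flags)
for f in queue:
    print(f.flag_id, f.feature_class, f.confidence)
```

Low-confidence flags remain at the end of the queue rather than being filtered out, so the human reviewer still decides what is discarded.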

CVI — Verify with Scale

- Enforce dual-laser scale on key frames.

- Require on-frame annotations (UTC, component ID, heading).

- AI flags become candidates, verified by qualified reviewers.
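To illustrate why dual-laser scale matters, the sketch below converts pixel distances to millimetres using the two laser dots as a reference. It assumes the lasers are parallel with a known spacing, and that the feature lies in roughly the same plane as the dots and square to the camera. The 100 mm spacing, pixel coordinates, and function names are illustrative assumptions.

```python
import math


def mm_per_pixel(dot_a, dot_b, laser_spacing_mm):
    """Scale factor from the pixel distance between the two laser dots."""
    pixel_distance = math.dist(dot_a, dot_b)
    if pixel_distance == 0:
        raise ValueError("laser dots coincide; frame cannot be scaled")
    return laser_spacing_mm / pixel_distance


def feature_length_mm(p1, p2, scale_mm_per_px):
    """Length of a feature measured between two pixel coordinates."""
    return math.dist(p1, p2) * scale_mm_per_px


# Illustrative values: dots 400 px apart, assumed laser spacing of 100 mm.
scale = mm_per_pixel((800, 1000), (1200, 1000), laser_spacing_mm=100.0)
length = feature_length_mm((500, 600), (800, 1000), scale)
print(f"scale = {scale:.3f} mm/px, feature = {length:.1f} mm")
```

A frame without both dots has no scale factor, which is why an unscaled detection cannot be sized at CVI.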

DVI — Compare & Detect Change

- Use AI for change detection between:

  - pre-clean vs post-clean,

  - campaign-to-campaign passes.

- Repair / monitor / accept decisions remain human.

For platform envelopes, optics, and payload headroom, see Underwater Inspection (ROV) under Inspection Services.

4K + Laser Scale: What AI Can (and Can’t) Do

What AI Can Do Reliably

- Detect defect candidates in high-quality footage:

  - probable coating breakdown,

  - under-film rust bloom,

  - anode depletion shapes.

- Localize weld features (toes / HAZ) when:

  - lighting is controlled,

  - dual-laser dots are visible.

- Support change detection between defined states.

- Reduce human review time, typically by ~40–70% when footage meets the capture discipline described in this guide.

What AI Cannot Replace

- Acceptance decisions governed by standards or Class rules.

- NDT measurements requiring calibration, probe control, or electrochemical correctness (UT / CP).

For method fundamentals and acceptance logic, see What Is NDT? A Complete Guide to Techniques & Benefits and (for subsea execution discipline) ROV-Deployed NDT in Practice: UT & CP That Deliver Repeatable, Class-Ready Results.

AI Governance That Keeps You Safe

Model Performance Policy

- Publish precision / recall targets per feature class (e.g. P ≥ 0.85 / R ≥ 0.70 for coating-loss candidates).

- Set a false-positive budget (e.g. ≤15% of flags per 1-hour segment).
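As a rough sketch of how such a policy could be checked per segment, the code below computes precision, recall, and the false-positive share from reviewer-confirmed counts. It assumes false negatives are available from a human audit of the same segment (recall cannot be computed from flags alone). The counts and function names are illustrative, and the thresholds are the example targets quoted above.

```python
def precision_recall(true_pos, false_pos, false_neg):
    """Precision and recall from reviewer-confirmed counts."""
    flagged = true_pos + false_pos      # everything the model flagged
    actual = true_pos + false_neg       # everything the audit says is real
    precision = true_pos / flagged if flagged else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


def false_positive_share(true_pos, false_pos):
    """Fraction of flags in the segment that reviewers rejected."""
    flagged = true_pos + false_pos
    return false_pos / flagged if flagged else 0.0


# Illustrative counts for one 1-hour segment of coating-loss candidates.
tp, fp, fn = 36, 4, 12
p, r = precision_recall(tp, fp, fn)
fp_share = false_positive_share(tp, fp)

# Example targets from the policy: P >= 0.85, R >= 0.70, FP share <= 15%.
meets_policy = p >= 0.85 and r >= 0.70 and fp_share <= 0.15
print(f"P={p:.2f} R={r:.2f} FP share={fp_share:.0%} meets policy: {meets_policy}")
```

Note that the false-positive share is simply one minus precision for the same segment, so the budget mainly adds a per-segment check on top of the campaign-level precision target.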

Human-in-the-Loop (Mandatory)

- Every AI-flagged item requires human confirmation before entering the anomaly register.

- Log reviewer overrides (accept / reject) to improve future campaigns.
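One way to picture this gate, as a hedged sketch rather than a prescribed implementation: every reviewer decision is logged, and only accepted flags reach the anomaly register. The `ReviewLog` class and its field names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewLog:
    """Hypothetical gate between AI flags and the anomaly register."""
    register: list = field(default_factory=list)   # human-confirmed flags only
    overrides: list = field(default_factory=list)  # every reviewer decision

    def record(self, flag_id, reviewer, decision):
        """Log the decision; promote the flag only if a human accepted it."""
        if decision not in ("accept", "reject"):
            raise ValueError(f"unknown decision: {decision!r}")
        self.overrides.append((flag_id, reviewer, decision))
        if decision == "accept":
            self.register.append(flag_id)


log = ReviewLog()
log.record("F-002", "reviewer_a", "accept")
log.record("F-003", "reviewer_a", "reject")
print(log.register)        # flags confirmed by a human
print(len(log.overrides))  # all decisions, kept for future model tuning
```

Because there is no code path into `register` other than `record`, an unreviewed flag cannot enter the register in this sketch.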

AI accelerates review—but never bypasses it.

Capture Discipline (Non-Negotiable for AI & Acceptance)

AI only performs as well as the footage allows.

- Stabilized HD / 4K with UTC timecode

- Dual-laser scale visible on key frames

- Consistent standoff

- Main + angled fill lighting to reduce glare on weld toes and glossy coatings

If these are missing, AI output is noise—not evidence.

AI + Sonar for Coverage Proof in Low Visibility

When optics degrade, sonar becomes the coverage record—and AI can help structure it.

What Works

- Imaging sonar for near-field plan/section views around complex geometry

- Multibeam sonar for mosaics over hulls, berths, pipelines, and harbor floors

- AI-assisted:

  - lane segmentation,

  - coverage gap detection,

  - hit clustering.
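Coverage gap detection can be illustrated with a deliberately simplified one-dimensional case: covered intervals along a single survey line, in metres. This is plain interval merging, not a machine-learning method, and real mosaics need the same logic in two dimensions. The function name and chainage values are assumptions for illustration.

```python
def find_coverage_gaps(covered, start, end):
    """Return uncovered (from, to) intervals within [start, end].

    `covered` is a list of (from, to) intervals in metres along one line.
    """
    gaps = []
    cursor = start                      # everything before cursor is covered
    for lo, hi in sorted(covered):
        if hi <= cursor:
            continue                    # interval adds no new coverage
        if lo >= end:
            break                       # interval lies beyond the survey line
        if lo > cursor:
            gaps.append((cursor, lo))   # uncovered stretch before this pass
        cursor = max(cursor, hi)
    if cursor < end:
        gaps.append((cursor, end))      # uncovered tail of the line
    return gaps


# Illustrative sonar passes along a 50 m line.
passes = [(0, 12), (10, 25), (31, 40), (40, 48)]
pending = find_coverage_gaps(passes, start=0, end=50)
print(pending)
```

The returned intervals correspond to the "pending" areas on a covered vs pending map.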

Tag → Revisit Workflow

- Tag sonar hits with:

  - lane ID,

  - UTC time,

  - vehicle pose.

- Revisit optically (CVI); add UT / CP only if the decision requires measured values.

What Class Expects

- Georeferenced mosaics with overlap and drift control

- Covered vs pending area maps

- Cross-links from maps to time-coded media

For the full low-visibility playbook, see Multibeam & Imaging Sonar as Coverage Evidence.

Photogrammetry with AI: Evidence Only When Measurable

AI can assist 3D reconstruction—but acceptance still depends on scale and uncertainty.

Decision-Grade Requirements

- Overlap ≥70% forward / ≥60% side

- Angle diversity (include orthogonal passes)

- Dual-laser scale and/or rigid targets

- Published QC:

  - reprojection error,

  - residuals,

  - masked frames.

- One-page uncertainty table with units and confidence bands
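The overlap targets translate directly into maximum frame and lane spacing. The sketch below assumes a flat target square to the camera and uses the simple pinhole relation footprint = 2 · standoff · tan(FOV / 2). The in-water field-of-view angles and the 1 m standoff are illustrative assumptions, not recommended settings.

```python
import math


def footprint_m(standoff_m, fov_deg):
    """Width of the imaged area on a flat target at the given standoff."""
    return 2.0 * standoff_m * math.tan(math.radians(fov_deg) / 2.0)


def max_spacing_m(footprint, min_overlap):
    """Largest step between frames (or lanes) that keeps the overlap."""
    if not 0.0 <= min_overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return footprint * (1.0 - min_overlap)


# Assumed in-water FOV: 60 deg along track, 90 deg across track, 1 m standoff.
along = footprint_m(1.0, 60.0)
across = footprint_m(1.0, 90.0)

frame_step = max_spacing_m(along, 0.70)   # forward overlap >= 70%
lane_step = max_spacing_m(across, 0.60)   # side overlap >= 60%
print(f"frame step <= {frame_step:.2f} m, lane step <= {lane_step:.2f} m")
```

Halving the standoff halves both footprints, so the same overlap targets then require twice as many frames and lanes.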

If scale or uncertainty is unclear, treat 3D as illustrative—not decision-grade.

For full methodology, see Subsea Photogrammetry & Digital Twins: Scale Control, Pass Design, Uncertainty & Class Acceptance.

Packaging AI Outputs for Class Acceptance

AI only delivers value when its outputs flow cleanly into the dossier.

Minimum Per-Item Evidence

- Unique anomaly ID + component reference

- Location (coordinates or relative) + UTC timestamp

- Scaled frame(s) with visible lasers

- Time-coded video segment

- Reviewer decision (accept / reject; monitor / repair)

- Calibration references (camera, laser spacing, any UT / CP probes used)

Store this in your standard anomaly register (CSV/XLSX) so integrity teams can ingest it directly.
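A minimal sketch of such a register row, assuming one CSV column per evidence item listed above. The column names, the `AnomalyRecord` class, and the sample values are illustrative, not a Class-mandated schema. The writer refuses any record with a blank evidence field, so incomplete items do not reach the register.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class AnomalyRecord:
    """One verified finding; column names are illustrative, not a standard."""
    anomaly_id: str
    component_ref: str
    location: str            # coordinates or relative position
    utc: str                 # ISO 8601 timestamp
    scaled_frames: str       # frame file names with visible lasers
    video_segment: str       # time-coded clip reference
    reviewer_decision: str   # e.g. "accept; monitor"
    calibration_refs: str    # camera, laser spacing, UT / CP probe IDs


def missing_fields(record):
    """Return the names of evidence fields that were left blank."""
    return [name for name, value in asdict(record).items() if not value.strip()]


def write_register(records, stream):
    """Write complete records as CSV; refuse any record with blank evidence."""
    names = [f.name for f in fields(AnomalyRecord)]
    writer = csv.DictWriter(stream, fieldnames=names)
    writer.writeheader()
    for record in records:
        blanks = missing_fields(record)
        if blanks:
            raise ValueError(f"{record.anomaly_id or '<no id>'}: missing {blanks}")
        writer.writerow(asdict(record))


# Illustrative record only.
record = AnomalyRecord(
    anomaly_id="AN-0001",
    component_ref="LEG-A1/NODE-03",
    location="EL -12.5 m, north face",
    utc="2024-05-01T10:05:40Z",
    scaled_frames="AN-0001_f01.png",
    video_segment="dive07.mp4@00:12:30-00:13:05",
    reviewer_decision="accept; monitor",
    calibration_refs="CAM-CAL-07; LASER-100mm; CP-PROBE-02",
)

buffer = io.StringIO()
write_register([record], buffer)
print(buffer.getvalue())
```

Writing to a file instead of `io.StringIO` only requires passing an open file handle (opened with `newline=""`, as the `csv` module documentation recommends).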

For turning verified findings into risk movement and inspection cadence, align with Risk-Based Inspection (RBI) (and, where you need a concrete data-to-plan bridge, use API 580/581 RBI Inputs to Plans).

Feeding AI Outputs into RBI

Treat AI as an uncertainty reducer, not a decision engine.

- Baseline: consequence classes and damage mechanisms

- Screen: AI accelerates GVI / CVI review

- Verify: targeted UT / CP where risk and flags intersect

- Trend: confirm or downgrade flags across campaigns

- Governance: version the RBI model; maintain a clear audit trail

This creates a defensible path:

AI screen → verified evidence → risk movement → interval change.

KPIs & Governance (What Builds Trust)

- Model quality: precision / recall per feature class

- Reviewer metrics: re-check rate, time-to-decision

- Data integrity: % frames with visible lasers, UTC sync pass rate

- Acceptance: first-pass acceptance ratio, rework requests per campaign

One clear KPI snapshot per campaign beats pages of claims.
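A per-campaign snapshot can be as simple as a handful of ratios. The sketch below assumes the raw counts are already available from the review and QC logs; the dictionary keys and the numbers are hypothetical.

```python
def pct(part, whole):
    """Percentage of `part` in `whole`; 0.0 when there is nothing to count."""
    return 100.0 * part / whole if whole else 0.0


def kpi_snapshot(c):
    """Build a small KPI table from raw campaign counts (hypothetical keys)."""
    return {
        "frames_with_lasers_pct": pct(c["frames_with_lasers"], c["frames_total"]),
        "utc_sync_pass_pct": pct(c["utc_sync_pass"], c["utc_sync_checks"]),
        "recheck_rate_pct": pct(c["items_rechecked"], c["items_reviewed"]),
        "first_pass_acceptance_pct": pct(c["accepted_first_pass"], c["items_submitted"]),
    }


# Illustrative counts for one campaign.
counts = {
    "frames_with_lasers": 950, "frames_total": 1000,
    "utc_sync_pass": 48, "utc_sync_checks": 50,
    "items_rechecked": 12, "items_reviewed": 200,
    "accepted_first_pass": 45, "items_submitted": 50,
}

snapshot = kpi_snapshot(counts)
for name, value in snapshot.items():
    print(f"{name}: {value:.1f}")
```

Model-quality figures (precision / recall per feature class) would sit alongside these ratios in the same snapshot.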

Practical Capture Rules That Make AI (and Acceptance) Work

- Lighting: main + angled fill to reveal weld geometry

- Standoff: stay inside lens sweet spot

- Bitrate: avoid aggressive compression; keep masters intact

- Lasers: both dots visible and in the same plane as the feature being sized

- Stability: use crawlers / magnetic aids for DVI zones if needed

- Pre/post-clean captures where sizing or confirmation matters

What Belongs to AI vs Humans

| Task | AI-Assisted | Final Responsibility | Evidence Required |
| --- | --- | --- | --- |
| GVI screening (coating/anodes) | Yes | Human reviewer | 4K frames with laser scale + UTC time reference |
| Change detection | Yes | Human reviewer | Like-for-like scaled frames (same geometry/angle/standoff) |
| Low-visibility coverage proof | Yes (sonar) | Human reviewer | Georeferenced mosaics + time-sync logs |
| Measurement (UT / CP) | No | NDT specialist | Calibration IDs + repeatability statistics |
| Class acceptance | No | Surveyor / Owner | Full traceable dossier (IDs, timestamps, calibration, closure) |

FAQs

Does AI change Class acceptance rules?

No. Acceptance is unchanged: time-coded media, scale, calibration, and qualified human sign-off remain mandatory.

What video quality is usable for AI?

Stabilized HD or 4K, consistent lighting, visible laser dots, and UTC timecode.

Can sonar outputs be accepted on their own?

For coverage proof, yes. Decisions on individual sonar hits still require CVI or UT/CP follow-up.

How do we control false positives?

Publish P/R targets, cap false-positive budgets, and log reviewer overrides.

Where does AI-assisted 3D fail?

Heavy turbidity, reflections, moving growth, or poor angle diversity. If uncertainty is unclear, do not use it for decisions.

Deploy AI—Without Losing Class Acceptance

We configure AI screeners, enforce laser/lighting/time-sync discipline, and package findings into a traceable, audit-ready dossier—so you gain speed without sacrificing acceptance.

To execute this as an in-water program with acceptance-grade deliverables, use Underwater Inspection (ROV) via Inspection Services.